Decades of well-funded research and development in machine learning and artificial intelligence (AI) are starting to make their mark on the world of machine translation. Over the last few months there have been several important announcements from the likes of Google, Facebook, and Microsoft about their initiatives in applying AI technologies to translation. For example:

- On April 28 Google’s US patent application “Neural Machine Translation Systems With Rare Word Processing” was published. Google is claiming exclusive rights to an NMT system made specific by how the system is implemented.[1]

- Facebook, which serves up two billion translations each day,[3] has begun the transition away from the Bing translation system to a new neural network system that is to be fully deployed by the end of this year.[2]

- In February Microsoft revealed that it is leveraging AI, including deep learning, for its own Translator Hub.[4]

So what is Neural Machine Translation?

Current phrase-based machine translation systems consist of many subcomponents that are optimized separately. Neural machine translation is a novel approach in which a single, large neural network is trained to maximize translation performance.[5] In the Google patent application mentioned above, a neural MT system is defined as “one that includes any neural network that maps a source natural language sentence in one natural language to a target sentence in a different natural language.”

Neural networks, the technology behind deep learning, loosely simulate the densely interconnected networks of neurons in the brain that allow us to learn, recognize patterns, solve problems, and make decisions.

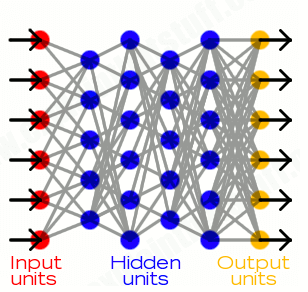

A fully connected artificial neural network is made up of input units (red), hidden units (blue), and output units (yellow), with all the units connected to all the units in the layers either side. Each connection is assigned a “weight”, which indicates the strength of the connection between the units.

Inputs, which are fed in from the left, activate the most relevant hidden units in the middle, which then activate the appropriate output units to the right.
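
The flow from inputs through hidden units to outputs can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the layer sizes, the weight values, the zero biases, and the sigmoid activation are all made up for the example and do not come from any of the systems mentioned in this article.

```python
import math

def sigmoid(x):
    # Squashes any number into the range (0, 1); an assumed activation function.
    return 1.0 / (1.0 + math.exp(-x))

def layer_forward(inputs, weights, biases):
    # weights[j][i] is the strength of the connection from unit i
    # in the previous layer to unit j in this layer.
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def forward(inputs, hidden_w, hidden_b, output_w, output_b):
    # Inputs activate the hidden units, which in turn activate the output units.
    hidden = layer_forward(inputs, hidden_w, hidden_b)
    output = layer_forward(hidden, output_w, output_b)
    return hidden, output

# Hypothetical network: 3 input units, 4 hidden units, 2 output units.
hidden_w = [
    [0.2, -0.4, 0.1],
    [0.5, 0.3, -0.2],
    [-0.3, 0.8, 0.6],
    [0.1, 0.1, 0.1],
]
hidden_b = [0.0, 0.0, 0.0, 0.0]
output_w = [
    [0.3, -0.1, 0.2, 0.4],
    [-0.5, 0.6, 0.1, -0.2],
]
output_b = [0.0, 0.0]

hidden, output = forward([1.0, 0.0, 1.0], hidden_w, hidden_b, output_w, output_b)
print("hidden activations:", hidden)
print("output activations:", output)
```

Every unit in one layer feeds every unit in the next, which is what "fully connected" means here; the weights decide how strongly each signal is passed along.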

A neural network is trained by comparing the output it produces with the output it was meant to produce, and using the difference between them to modify the weights of the connections between the units in the network.[6]
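
As a rough sketch of that idea, the Python below trains a single output unit with a simple gradient-descent update (often called the delta rule). It is a deliberately simplified stand-in: real networks with hidden layers are usually trained with backpropagation, and the toy task (logical OR), the learning rate, and the number of passes are assumptions chosen only for illustration.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def predict(weights, bias, inputs):
    # Output actually produced by the unit for these inputs.
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)

def train_step(weights, bias, inputs, target, learning_rate=0.5):
    output = predict(weights, bias, inputs)
    # Difference between the intended output and the produced output.
    error = target - output
    # Scale the error by the slope of the sigmoid (gradient of squared error).
    delta = error * output * (1.0 - output)
    # Nudge each weight in the direction that shrinks the error.
    new_weights = [w + learning_rate * delta * x for w, x in zip(weights, inputs)]
    new_bias = bias + learning_rate * delta
    return new_weights, new_bias

def total_error(weights, bias, examples):
    return sum((t - predict(weights, bias, x)) ** 2 for x, t in examples)

# Toy training data (assumed): the logical OR of two inputs.
examples = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]

weights, bias = [0.0, 0.0], 0.0
error_before = total_error(weights, bias, examples)

for _ in range(2000):
    for inputs, target in examples:
        weights, bias = train_step(weights, bias, inputs, target)

error_after = total_error(weights, bias, examples)
print("error before training:", error_before)
print("error after training:", error_after)
```

The same compare-and-adjust loop, repeated over millions of sentence pairs and far more weights, is in broad terms how a translation network learns.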

Neural Machine Translation systems are far more complex and adaptive than statistically trained models. Once trained, a translation neural network has contextual awareness and deep learning capabilities that allow it to understand the domain in which a conversation is taking place and the linguistic norms of that environment.[2]

The Promise of NMT

NMT is still in its infancy, and transforming it into a truly robust platform will be a highly collaborative effort. Google may end up holding a seminal patent, but as Language Weaver co-founder Daniel Marcu notes, “There are hundreds of statistical-MT-related patents. Getting statistical MT to work required hundreds of innovations. Neural MT is no different. It will require hundreds of innovations and patents to get MT to the next level of accuracy.”[1]

To this end, it is encouraging to note that last year Facebook open-sourced Torch, its deep learning library, and, later in the year, it open-sourced its AI server Big Sur. In November, Google opened its TensorFlow machine learning library for public use. Amazon released its deep learning software DSSTNE last month.[8]

In addition, watch out for hardware advances that will further enhance the performance of neural networks in general, and NMT in particular. At the end of May the startup company Nervana announced that, in cooperation with Google, it is designing and building a custom ASIC processor for neural networks and machine learning applications that will run 10 times faster than the graphics processing units (GPUs) that are typically used today for neural network computations.[7]

As NMT takes machine translation to the next level, human translators will have a powerful partner in the quest to achieve and maintain the highest levels of quality in the face of ever-growing quantities of content to be translated.

References

[1] Marion Marking, Google Applies for Neural Machine Translation Patent, Experts React, May 24, 2016

[2] Peter Corless, Facebook, Google and the Future of Neural MT, June 2016

[3] Rachel Metz, Facebook Plans to Boost Its Translations Using Neural Networks This Year, May 23, 2016

[4] Gino Dino, Confirmed: Deep Learning Is Coming to Google Translate, March 17, 2016

[5] Neural Machine Translation by LISA, University of Montreal, 2014

[6] Chris Woodford, Neural networks, March 18, 2016

[7] Steven Max Patterson, Startup Nervana joins Google in building hardware tailored for neural networks, May 31, 2016

[8] Khari Johnson, Facebook wants chatbots to learn the way people do, June 16, 2016